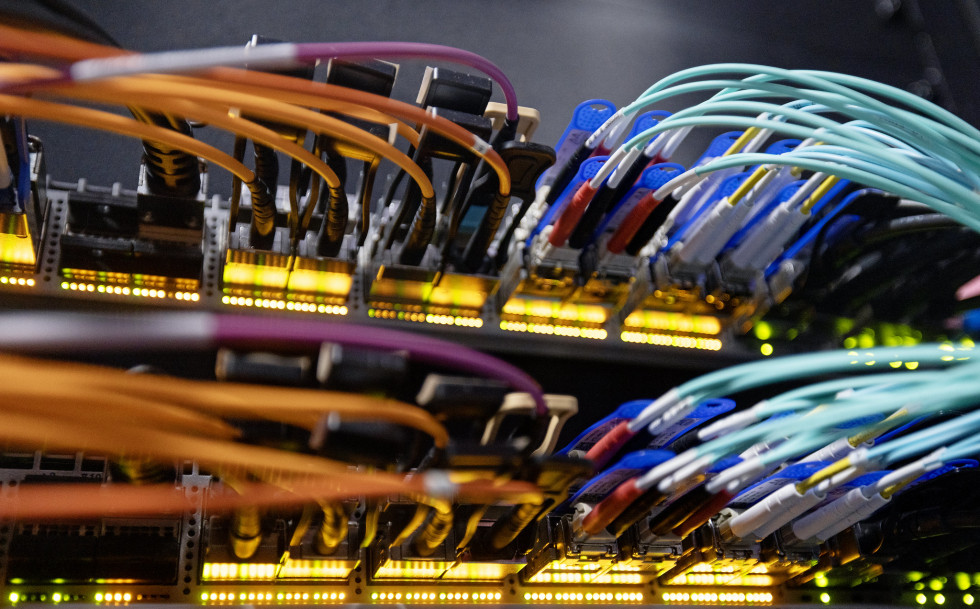

Jefferson Lab's newest cluster computer, 24s, is seen here in the lab's Data Center. Jefferson Lab photos/Aileen Devlin

Jefferson Lab’s new 24s computing cluster comes online for nuclear and particle physics

NEWPORT NEWS, VA – The latest addition to the computational arsenal of the U.S. Department of Energy’s Thomas Jefferson National Accelerator Facility is an extraordinary machine with the admittedly ordinary name of “24s.” In a world where the fastest of supercomputers have such names as Frontier, Aurora, El Capitan, Jupiter, Blueback, JEDI and Eagle, a name like 24s might seem out of place.

“It may not be the most whimsical of names,” Robert Edwards said. “There are all these computers out there with whimsical names, and the problem is that if you want to use another lab’s computer you have to start thinking, ‘Which whimsical name is that?’”

Edwards, a principal staff scientist and deputy director of Jefferson Lab’s Center for Theoretical and Computational Physics, is working with Amitoj Singh, a computer scientist and contractor program manager for the Jefferson Lab LQCD Nuclear Physics program in the lab’s Computational Sciences and Technology division, to put 24s to work unlocking the mysteries of the atomic nucleus. The new computer’s name combines the year of acquisition, 24, with “s” for Sapphire Rapids, the codename of its Intel CPUs.

Edwards and Singh are using an approach known as lattice Quantum Chromodynamics, or LQCD. LQCD research pairs advanced physics theory with computational power. Edwards noted that the developments that led to the acquisition of 24s, as well as his own involvement with Jefferson Lab, had their genesis in a Science and Technology Review in the late 1990s.

“Back then, the committee said that as you're a nuclear physics lab, you should have a computation component that would try to predict what was going to be seen in the experiments. That sort of thing was still fairly new,” Edwards said. “And so, two physicists were hired — David Richards and myself — to jumpstart this program and work with the computing side of the lab.”

As a member of the Jefferson Lab Theory Center, Edwards brought considerable computational experience. At Florida State University, he helped to design a machine that won the Association for Computing Machinery’s Gordon Bell Prize, awarded annually to recognize significant achievement in high-performance computing.

“What we want to do is to discover the limits of the Standard Model,” Edwards said, referring to physicists’ theoretical blueprint of the elementary particles and interactions that make up matter. “We want to answer basic questions about the structure of matter, including mass and excitations of hadrons and nuclei.”

LQCD is a route toward an important waypoint on this quest: a fuller understanding of the structure of the proton, the subatomic particle that itself is assembled from elementary particles known as quarks and gluons.

The assembly of quarks and gluons in the proton (as well as its companion nuclear hadron, the neutron) is mediated by the strong interaction, one of the four fundamental forces of nature, along with gravity, electromagnetism and the weak force, an interaction seen in particle decay. Edwards said the search goes back at least a century to the observation of photon-sparked excitations in hydrogen atoms.

“This was the origin of quantum mechanics,” he said. “One of the goals of the experiments we have here is to try and understand fundamental symmetries of nature and their violations. We think there's stuff beyond the Standard Model.”

The idea at Jefferson Lab is to probe the Standard Model and beyond by documenting evidence of those fundamental symmetries of nature and their violations. Direct observation of nuclear goings-on at high energies may be out of reach for some time, so researchers look for evidence in the debris of particle collisions, much as detectives may suss out the details of an auto accident by examining the damaged vehicles.

Systems Administrator David Rackley, left, works on the High Performance Computing (HPC) nodes for the Lattice Quantum Chromodynamics (LQCD) project in the Data Center at Jefferson Lab in Newport News, Va. Jefferson Lab photos/Aileen Devlin

LQCD, also called Lattice QCD, is designed to predict what the researchers who are using particle accelerators and colliders should be looking for and where.

These calculations require a high-performance machine. The 24s cluster at Jefferson Lab was funded with a $1.3 million budget by the Nuclear and Particle Physics LQCD Computing Initiative of DOE’s Office of Nuclear Physics.
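As the name suggests, lattice QCD replaces continuous space and time with a finite four-dimensional grid of points, and the gluon field lives on the links between neighboring points. To give a rough sense of why such calculations need large machines, the sketch below estimates the memory for a single gauge-field configuration. It assumes an L³ × T lattice, four links per site (one per direction) and each link stored as a 3 × 3 complex matrix in double precision; the lattice sizes are hypothetical examples, and real codes hold many more fields (quark propagators, solver vectors), so actual memory use is considerably higher.

```python
# Minimal sketch: memory for one SU(3) gauge configuration on a 4D lattice.
# Assumptions (illustrative only): L^3 x T sites, 4 link matrices per site,
# each a 3x3 complex matrix stored as 18 double-precision (8-byte) reals.

LINKS_PER_SITE = 4   # one link per spacetime direction
REALS_PER_LINK = 18  # 3x3 complex matrix = 9 complex numbers = 18 reals
BYTES_PER_REAL = 8   # double precision


def gauge_field_bytes(L: int, T: int) -> int:
    """Return the bytes needed to store one gauge field on an L^3 x T lattice."""
    sites = L ** 3 * T
    return sites * LINKS_PER_SITE * REALS_PER_LINK * BYTES_PER_REAL


if __name__ == "__main__":
    # Hypothetical lattice sizes, chosen only for illustration.
    for L, T in [(32, 64), (64, 128)]:
        gb = gauge_field_bytes(L, T) / 1e9
        print(f"{L}^3 x {T} lattice: {gb:.1f} GB per gauge configuration")
```

Under these assumptions a 64³ × 128 configuration alone comes to roughly 19 GB, and doubling the linear size L multiplies the memory by eight.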

“The acquisition of 24s began with the writing of a document called an RFP, or request for proposal,” Singh said. “That document has specifications and various configurations for the machine in it.”

Singh and Edwards conferred extensively about the specs for the machine that would become 24s, and they carried their thoughts into meetings with a number of committees.

“I try my best to understand the needs of Lattice QCD,” Singh said, “exactly what type of machine would work or what type of hardware is best, because there's hundreds of hardware options available in the market today. But what is best suited for Lattice QCD?”

Singh and Edwards cherry-picked the best available hardware options, building a portfolio of what the best Jefferson Lab LQCD machine would look like. After careful consideration and consultation, Jefferson Lab sought proposals from several vendors, Singh said.

“And we chose the best bid,” he added, “which was not awarded on the lowest price, but on the best value.”

The winner of the best-value review for 24s was an Intel 32-core machine.

Singh says that computer scientists classify 24s as a ‘cluster’: essentially an assembly of servers that work together as a single computer, much like the eight pistons of a V8 engine. Its processing speed is 78 teraFLOPS.

“That’s 78 trillion floating point operations per second. And there is a total of 100 servers,” Singh explained. “Each server is an Intel-based 32-core machine with one terabyte of memory at the fastest memory bandwidth you can buy.”
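The figures Singh quotes can be broken down with simple arithmetic. The sketch below divides the aggregate 78 teraFLOPS evenly across the 100 servers and their 32 cores each. The article does not say whether 78 teraFLOPS is a theoretical peak or a measured benchmark, so these are averages only, not per-core specifications.

```python
# Back-of-envelope split of the aggregate rate quoted for 24s.
# Assumption: the 78 teraFLOPS total is shared evenly by all servers and cores.

TOTAL_FLOPS = 78e12     # 78 trillion floating point operations per second
SERVERS = 100           # total servers in the cluster
CORES_PER_SERVER = 32   # Intel-based 32-core machine per server


def per_server_flops(total: float, servers: int) -> float:
    """Average floating point rate per server."""
    return total / servers


def per_core_flops(total: float, servers: int, cores: int) -> float:
    """Average floating point rate per core across the whole cluster."""
    return total / (servers * cores)


if __name__ == "__main__":
    print(f"total cores: {SERVERS * CORES_PER_SERVER}")
    print(f"per server:  {per_server_flops(TOTAL_FLOPS, SERVERS) / 1e9:.0f} GFLOPS")
    print(f"per core:    {per_core_flops(TOTAL_FLOPS, SERVERS, CORES_PER_SERVER) / 1e9:.3f} GFLOPS")
```

That works out to 3,200 cores, about 780 billion operations per second per server and roughly 24 billion per core on average.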

“The CPUs and the memory are a big support for Lattice QCD. The memory bandwidth is very, very important,” Singh explained. “When I say, ‘memory bandwidth,’ it means how fast can the CPU read data from memory. So, we went with the fastest memory bandwidth machine.”

He said the memory in each 24s server runs at 4,800 million transfers per second.
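A transfer rate becomes a bandwidth once you know how many bytes move per transfer and how many memory channels work in parallel. The sketch below assumes 64-bit (8-byte) DDR5 channels and a hypothetical eight channels per server; neither number comes from the article. It then applies the standard roofline model to show why Singh stresses bandwidth: a calculation that performs few arithmetic operations per byte it reads, as lattice QCD solvers are widely regarded to do, is capped by bandwidth times arithmetic intensity long before it reaches the processor's compute limit. The compute ceiling used here is simply the average 780 GFLOPS per server implied by the article's totals, which is itself an assumption.

```python
# Sketch: peak memory bandwidth from a transfer rate, plus a roofline estimate.
# Assumptions (not from the article): 8-byte channels, 8 channels per server,
# and a per-server compute ceiling of 78 TFLOPS / 100 servers.

TRANSFERS_PER_SEC = 4_800e6   # 4,800 million transfers per second
BYTES_PER_TRANSFER = 8        # assumed 64-bit channel width
CHANNELS = 8                  # hypothetical channel count per server
PEAK_FLOPS = 78e12 / 100      # average per-server rate implied by the article


def peak_bandwidth(mts: float, bytes_per_transfer: int, channels: int) -> float:
    """Theoretical peak memory bandwidth in bytes per second."""
    return mts * bytes_per_transfer * channels


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline model: a kernel doing `intensity` flops per byte moved runs no
    faster than the compute peak or bandwidth * intensity, whichever is lower."""
    return min(peak_flops, bandwidth * intensity)


if __name__ == "__main__":
    bw = peak_bandwidth(TRANSFERS_PER_SEC, BYTES_PER_TRANSFER, CHANNELS)
    print(f"peak bandwidth: {bw / 1e9:.1f} GB/s")
    for ai in (0.5, 1.0, 4.0):  # flops per byte, illustrative values
        gf = attainable_flops(ai, PEAK_FLOPS, bw) / 1e9
        print(f"intensity {ai} flop/byte -> at most {gf:.1f} GFLOPS")
```

With these assumed numbers, a kernel at 0.5 or 1 flop per byte is held to a fraction of the compute ceiling, so faster memory translates directly into faster calculations.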

Stationed underneath the floor of the Jefferson Lab Data Center is the high-speed data transfer fabric used for collecting raw experimental data at Jefferson Lab in Newport News, Va. Jefferson Lab photos/Aileen Devlin

“So basically, you can feed the data to your operations really, really fast,” Singh noted. “In early 2024, that's the fastest memory you could buy.”

Singh described another virtue of 24s: network speed. Many LQCD jobs or applications don't run on a single server, he noted.

“The way you make your cluster work as a supercomputer is by spreading your workload across different servers, but then those workloads need to communicate with each other,” he explained. “And once you go over the network, you take a performance hit called latency. So, we opted to buy the fastest network possible.”
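A common way to spread a lattice calculation across servers is domain decomposition: the 4D lattice is cut into equal sub-lattices, one per server, and each server must exchange the sites on its boundary faces (the "halo") with its neighbors. The sketch below, with a hypothetical 32³ × 64 lattice and hypothetical process grids, shows that as the work is split more finely, the share of sites that must cross the network grows relative to the work done locally. It assumes a nearest-neighbor stencil with a halo one site deep.

```python
# Sketch: domain decomposition of a 4D lattice and the resulting halo size.
# Lattice size and process grids are hypothetical examples.
from math import prod


def local_dims(global_dims, grid):
    """Sub-lattice extent per server; each global extent must divide evenly."""
    for g, p in zip(global_dims, grid):
        if g % p:
            raise ValueError(f"extent {g} is not divisible by {p}")
    return tuple(g // p for g, p in zip(global_dims, grid))


def halo_sites(local, grid):
    """Boundary sites to exchange with neighbors: a forward and a backward face
    in every direction that is split across servers (p > 1)."""
    volume = prod(local)
    total = 0
    for extent, p in zip(local, grid):
        if p > 1:
            total += 2 * (volume // extent)  # each face holds volume/extent sites
    return total


if __name__ == "__main__":
    global_dims = (32, 32, 32, 64)  # hypothetical L^3 x T lattice
    for grid in [(1, 1, 1, 2), (2, 2, 2, 4)]:
        loc = local_dims(global_dims, grid)
        vol, halo = prod(loc), halo_sites(loc, grid)
        print(f"{prod(grid):>2} servers: local {loc}, volume {vol}, "
              f"halo {halo}, halo/volume {halo / vol:.3f}")
```

In this example, going from 2 to 32 servers raises the halo-to-volume ratio from about 0.06 to 0.5, which is why communication speed matters more as a job spreads out.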

He explained that Jefferson Lab selected InfiniBand as the network backbone for the machine, an option that offers higher speed — 400 gigabits per second — and lower latency than the more common Ethernet.
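The trade-off between latency and speed can be illustrated with the simple latency-bandwidth (often called "alpha-beta") model, in which sending a message costs a fixed latency plus its size divided by the link bandwidth. The 400 gigabits per second figure is from the article; the 1-microsecond latency is a hypothetical placeholder, not a measured value for 24s, and the model ignores contention and protocol overhead.

```python
# Sketch of the latency-bandwidth model: t = latency + size / bandwidth.
# Link rate from the article; latency is a hypothetical placeholder.

LINK_GBITS = 400                    # InfiniBand link rate, gigabits per second
BANDWIDTH = LINK_GBITS * 1e9 / 8    # bytes per second (50 GB/s)
LATENCY = 1e-6                      # hypothetical per-message latency, seconds


def message_time(nbytes: int, latency: float = LATENCY,
                 bandwidth: float = BANDWIDTH) -> float:
    """Estimated time in seconds to send one message of `nbytes` bytes."""
    return latency + nbytes / bandwidth


if __name__ == "__main__":
    for nbytes in (1_000, 1_000_000, 100_000_000):
        t = message_time(nbytes)
        print(f"{nbytes:>11,} bytes: {t * 1e6:9.2f} us, "
              f"latency share {LATENCY / t:.0%}")
```

Under these assumptions, small messages are dominated almost entirely by latency while large ones are dominated by bandwidth, so a fast interconnect needs to do well on both.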

Singh and Edwards agree that the acquisition of 24s was a well-considered decision involving not only a collaboration between the two but also input from a number of committee members.

“When we finally are ready to make a decision, that is again put to the various committees, so we end up putting a machine on the floor that works,” Singh said.

He noted that LQCD calculations are time-consuming, even on advanced computation machinery such as 24s.

“And a lot of the scientific experiments out in the ecosystem that the DOE funds are waiting for answers that are going to come out of these Lattice QCD calculations,” he said. “These calculations, running on machines similar to 24s, provide answers which the experiments can then use as a guiding principle, or they can look at it like a blueprint: That's what the theory says; let's see if it matches with the experiment.”

By Joe McClain

Contact: Kandice Carter, Jefferson Lab Communications Office, kcarter@jlab.org